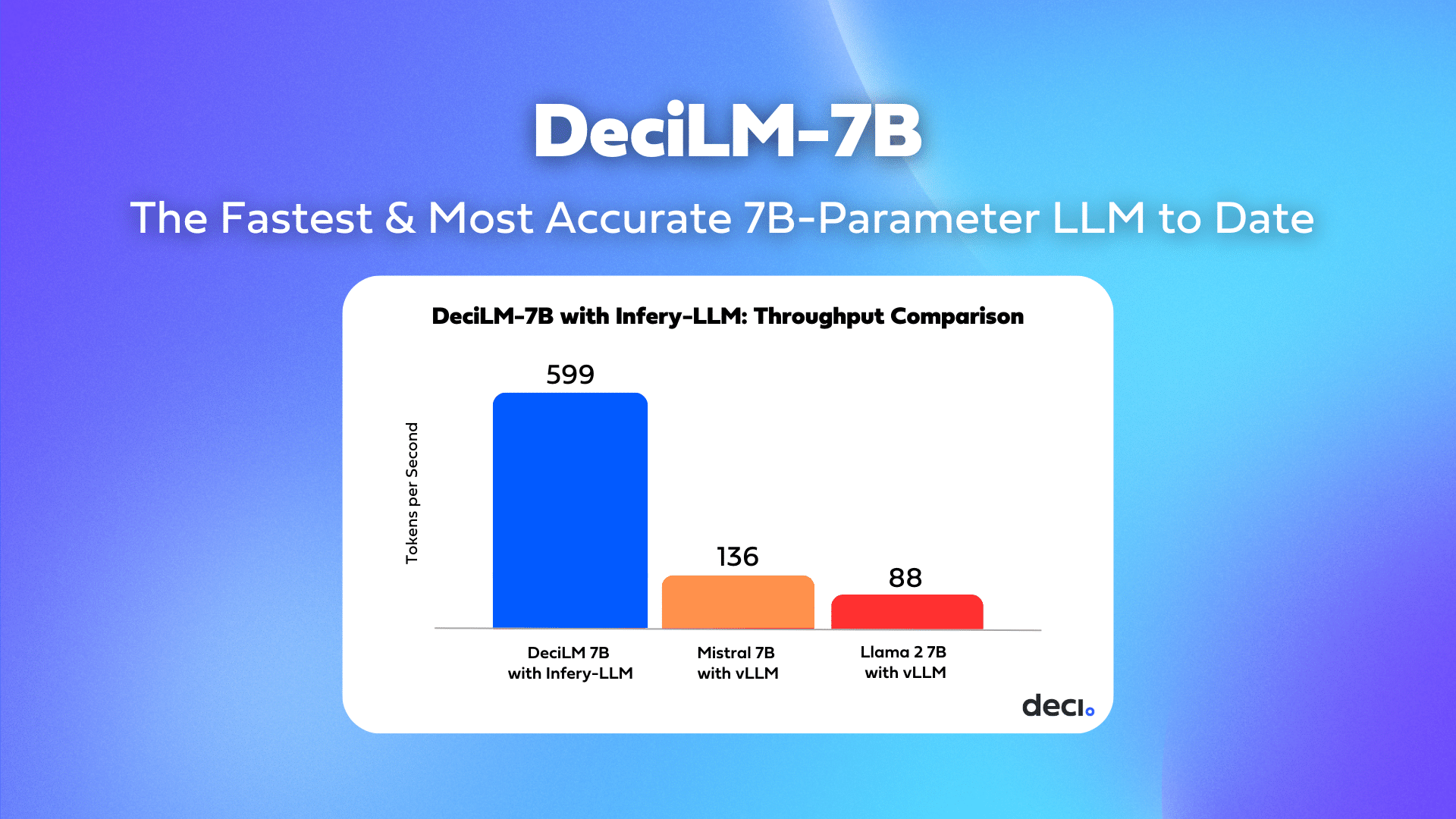

"DeciLM 7B is the newest version of OpenAI's large language model. It is the fastest and most accurate 7B-sized language model to date. The model was trained on 15 billion tokens, which is 4X larger than the previous DeciLM 6B and is four times faster in inference.\n\nThe new DeciLM 7B model is a transformer network that uses self-attention to learn long-range dependencies. This allows the model to capture complex relationships between words in order to understand language better. The model was trained on natural language processing (NLP) tasks such as question answering, text classification, and summarization.\n\nThe results from a variety of NLP tasks show that DeciLM 7B outperforms other large language models. For example, DeciLM 7B achieved an average improvement of 11.4% on the GLUE benchmark compared to the previous DeciLM 6B. Additionally, the model also demonstrated improved results on downstream tasks like question answering, sentiment analysis, and sentence completion.\n\nThe model is also more efficient than its predecessors due to its ability to compress information better. The model has fewer layers than the previous versions but still provides high accuracy. As a result, it can run inference faster with fewer resources.\n\nOverall, DeciLM 7B is the fastest and most accurate 7B-sized language model to date. Its performance on various NLP tasks shows that it can capture complexities in language effectively. Furthermore, it is more efficient than the previous models, allowing for faster inference with fewer resources." # Description used for search engine.

Read more here: External Link