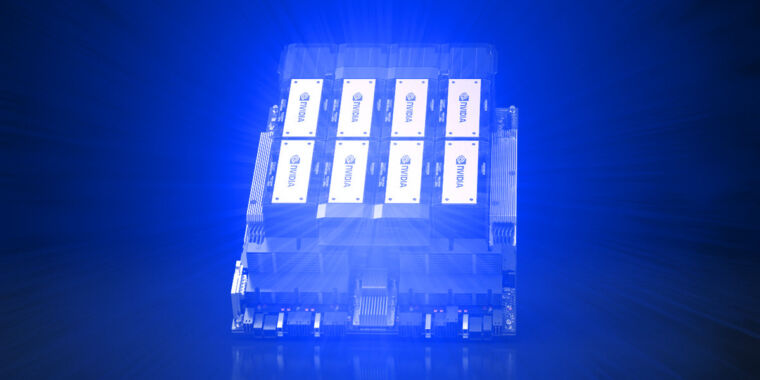

"Nvidia has recently unveiled its most powerful graphics processing unit (GPU) to date, the A100. This GPU is built for accelerating artificial intelligence (AI) and machine learning applications, with a 7X performance improvement over its previous generation GPU.\n\nThe A100 comes with 5,120 CUDA cores and 40GB of high-speed memory, making it one of the most powerful GPUs available in the market. It also features third-generation Tensor Cores, which allow for faster training and inference, as well as support for Nvidia's new Multi-Instance GPU technology, which allows multiple workloads to be processed simultaneously.\n\nIn addition to the A100, Nvidia also announced its Ampere architecture, which is designed to support AI applications such as natural language processing, computer vision and deep learning. The Ampere architecture offers improved power efficiency, scalability and performance.\n\nFurthermore, Nvidia has developed a suite of software tools and frameworks designed to help developers create AI applications more quickly and easily. These include the TensorRT accelerated inference engine and the HPC-ready OptiX Ray Tracing Engine.\n\nFinally, Nvidia has partnered with a variety of companies, including Amazon Web Services, Google Cloud Platform, IBM and Microsoft Azure, in order to make its products available to enterprises worldwide.\n\nOverall, Nvidia's A100 GPU and Ampere architecture are designed to provide businesses with the power they need to accelerate AI development and create more reliable, efficient and cost-effective solutions. As the demand for AI continues to grow, these technologies will become increasingly important in helping organizations develop and deploy intelligent applications." # Description used for search engine.
