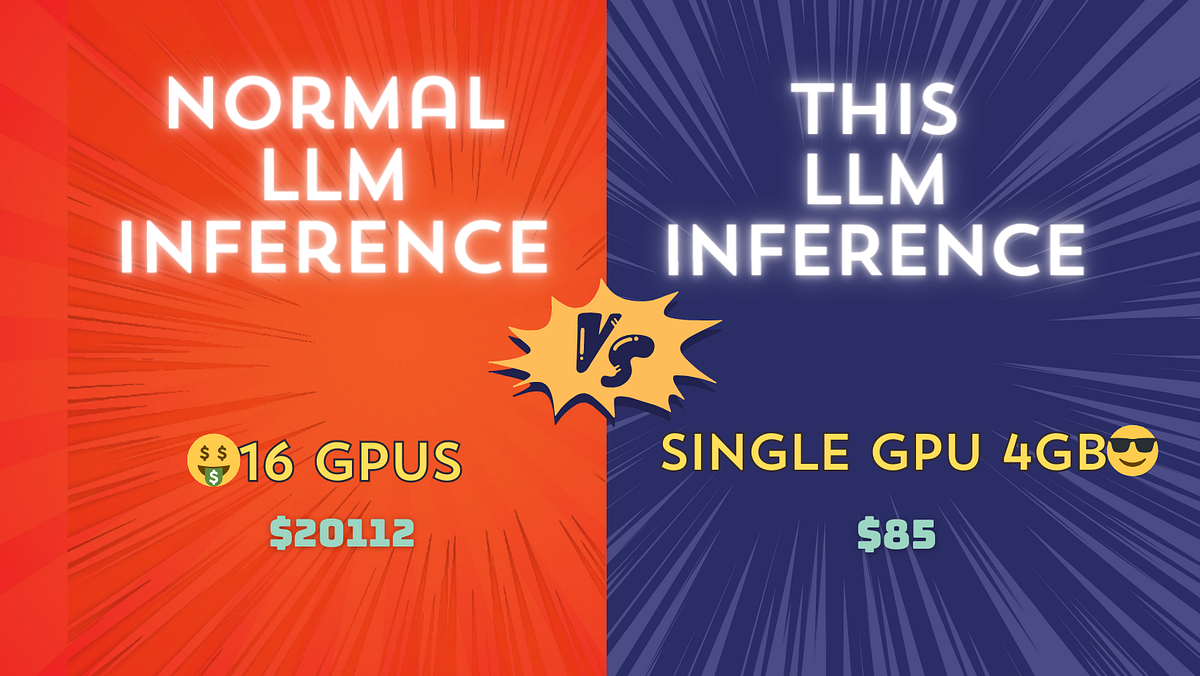

"This article discusses a new technique that allows people to run a 70 billion parameter large language model (LLM) on a single 4GB GPU. Traditionally, running such a large model requires a powerful cluster of GPUs or a Tensor Processing Unit (TPU). However, this new technique eliminates the need for expensive hardware, making it much more accessible and affordable.\n\nThe technique uses data sparsity and pruning to drastically reduce the number of calculations required to do inference on a LLM. As a result, the authors were able to fit a 70 billion parameter LLM into 4GB of RAM, and run inference on it in just a few seconds. This is a major improvement over traditional methods, which can take minutes or even hours to complete the same task.\n\nThe authors also outline some of the implications of their work. They suggest that their technique could be used to create smaller and faster models for natural language processing tasks, allowing them to be used on mobile devices or in other resource-constrained settings. Furthermore, the authors believe that their approach could enable the development of “super-intelligent” AI agents which are able to learn from experience and make decisions more quickly than ever before.\n\nOverall, this article provides an exciting glimpse at the potential of a new technique for running large language models on limited hardware. By drastically reducing the cost and time associated with training such models, this technique could revolutionize the field of natural language processing and help move us closer to achieving truly intelligent machines." # Description used for search engine.

Read more here: External Link