Fast and Expressive LLM Inference with RadixAttention and SGLang

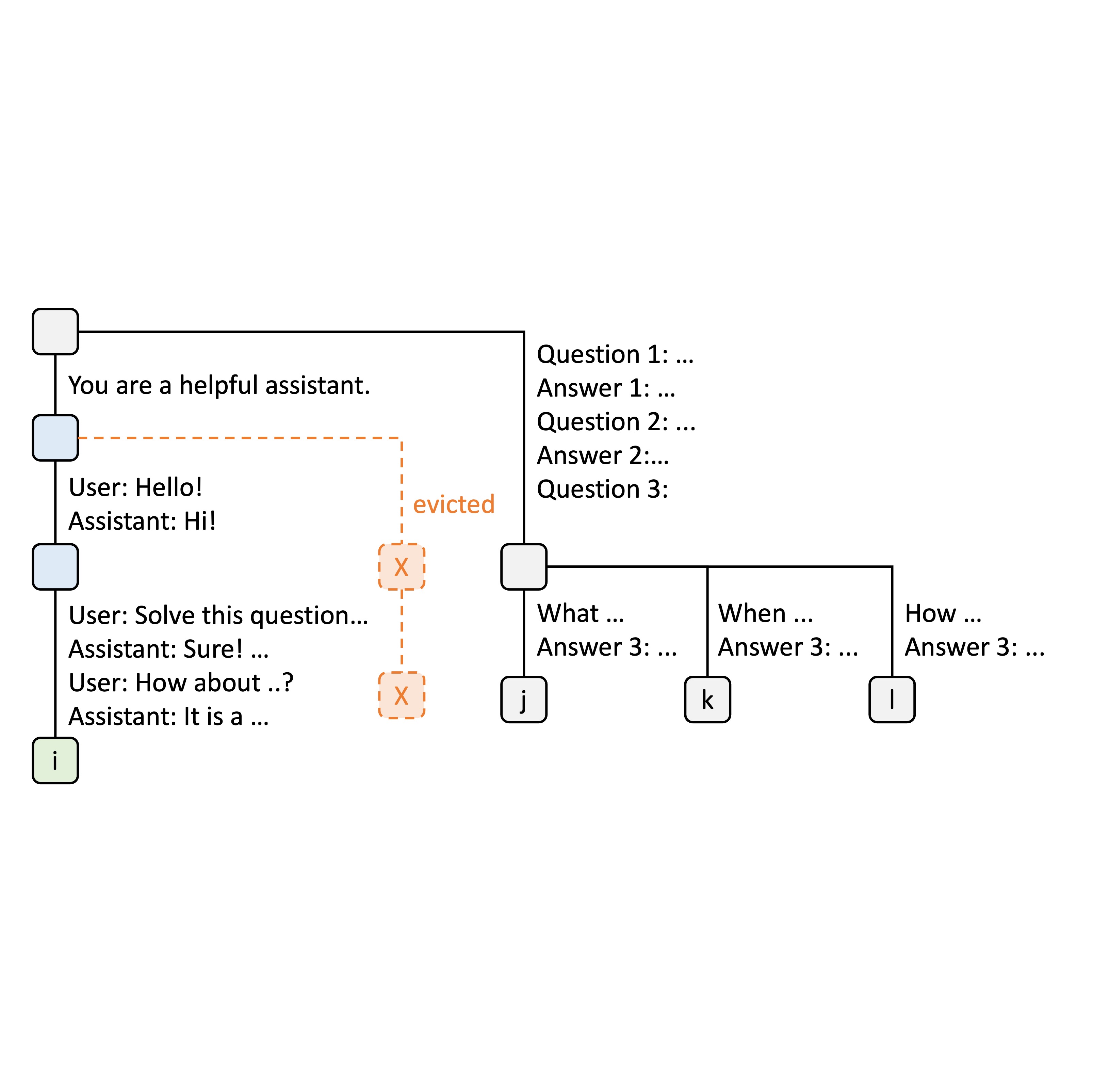

Large Language Models (LLMs) are increasingly utilized for complex tasks that require multiple chained generation calls, advanced prompting techniques, co...
