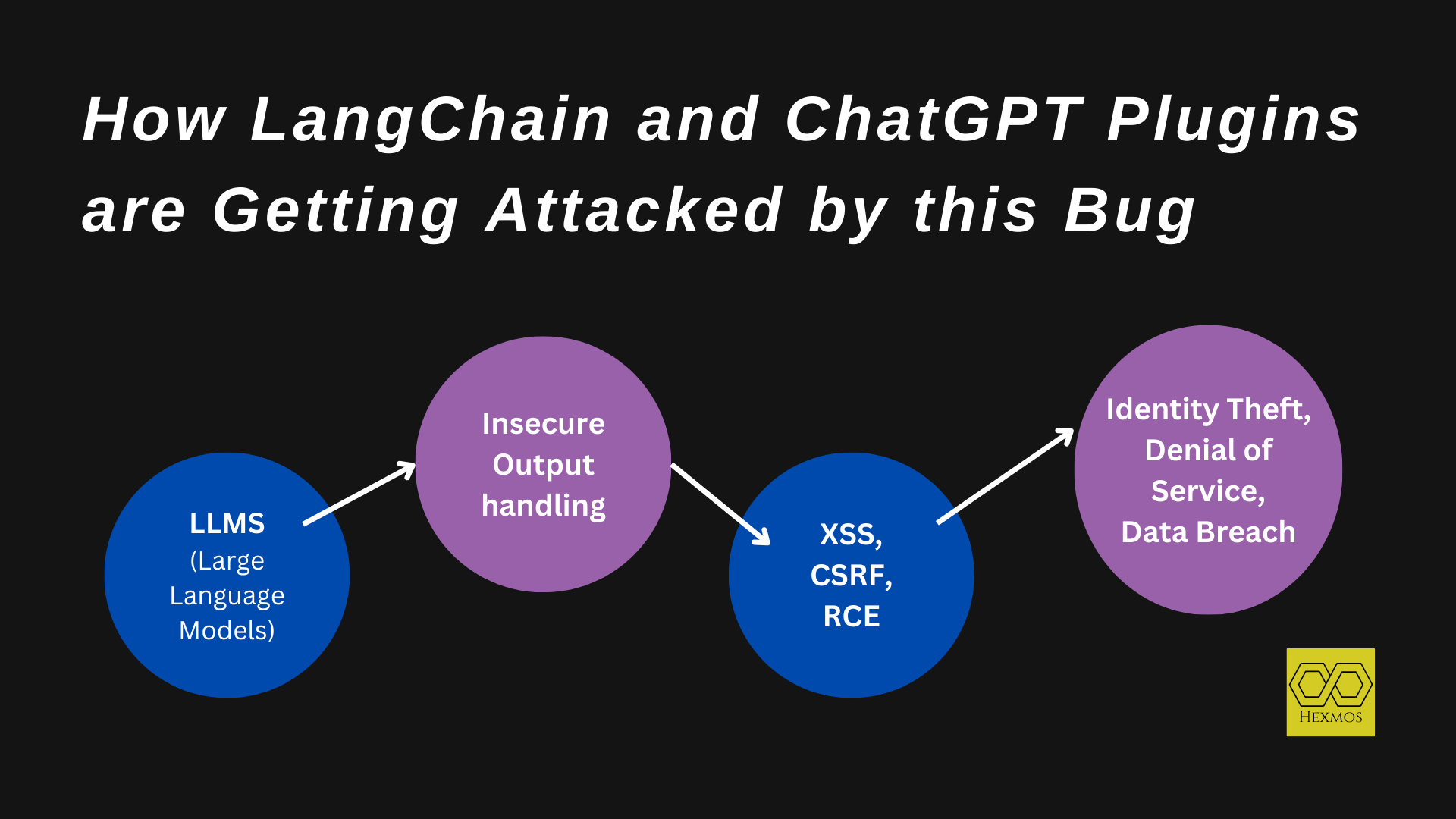

How LangChain and ChatGPT plugins are getting hacked through Insecure Output Handling

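Insecure Output Handling in LLM applications occurs when a model's output is passed to downstream components, such as a browser, a shell, a Python interpreter, a database, or another plugin, without validation or sanitization. Because an attacker can shape that output through prompt injection, the result can be cross-site scripting (XSS), server-side request forgery (SSRF), privilege escalation, or remote code execution. In this article, we focus on real-world scenarios, practical demos, and prevention mechanisms, with examples.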
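A minimal sketch in Python of the core pattern, assuming an app that asks a model for an arithmetic expression and then evaluates it, and separately renders model answers into HTML. The model output is simulated by a hypothetical string `llm_output` (no LangChain or OpenAI API is called), and `safe_eval_math` and `render_llm_html` are illustrative helper names, not part of any library. The unsafe line shows the kind of `eval`-on-model-output pattern behind reported LangChain code-execution issues; the helpers show two common mitigations: an allowlist parser instead of `eval`, and HTML escaping before rendering.

```python
import ast
import html
import operator

# Hypothetical model output. In a real app this string comes from the LLM,
# and an attacker can steer it via prompt injection.
llm_output = "__import__('os').system('id')"

# UNSAFE (do not do this): passing model output straight to eval()
# would execute arbitrary code on the server.
# result = eval(llm_output)

# Allowlist of arithmetic operators. Pow is deliberately excluded because
# an expression like 9**9**9 can exhaust CPU and memory.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
    ast.UAdd: operator.pos,
}


def safe_eval_math(expr: str):
    """Evaluate a pure arithmetic expression; reject everything else."""
    try:
        tree = ast.parse(expr, mode="eval")
    except SyntaxError as exc:
        raise ValueError("model output is not a valid expression") from exc

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        # Only plain int/float literals (bool is a subclass of int, so exclude it).
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        # Calls, attribute access, names, imports, etc. are all rejected.
        raise ValueError(f"disallowed expression element: {type(node).__name__}")

    return _eval(tree)


def render_llm_html(text: str) -> str:
    """Escape model output before embedding it in an HTML page (blocks XSS)."""
    return f"<div class='answer'>{html.escape(text)}</div>"


if __name__ == "__main__":
    print(safe_eval_math("2 * (3 + 4)"))  # 14
    try:
        safe_eval_math(llm_output)
    except ValueError as err:
        print("blocked:", err)
    print(render_llm_html("<script>alert(1)</script>"))
```

The design choice is to treat model output like any other untrusted user input: the evaluator accepts only a known-good subset (an allowlist) rather than trying to block known-bad strings, and the renderer encodes output for the context it lands in (HTML here; SQL, shell, or URLs would each need their own encoding or parameterization).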

Read more here: External Link